By the time you read this article, you have most likely heard of ChatGPT. This "new AI" made so many waves that I couldn't resist digging into it and writing something about it. But rather than jumping on the ChatGPT and OpenAI hype train, I want to contribute to the discussion with what I've learned over the past few weeks.

This article covers KoboldAI. The most curious of you can check out its GitHub page and give it a try right away. For those who want to learn more, follow along with me in this article.

What is KoboldAI? 🤔

KoboldAI is, as its creators describe it, "Your gateway to GPT writing". It's a browser-based front-end for AI-assisted writing, storytelling and dungeon adventures. In a way, it's like ChatGPT, but with many more settings you can fine-tune as you wish. The best part is that you can run it locally on your PC.

Why haven't you heard of KoboldAI? 🧐

Machine learning and text-to-text generation are nothing new. It's just that ChatGPT's popularity got people interested in the field. You may recall articles from 2019 about GPT-2 and how effective it was. GPTs (Generative Pre-trained Transformers) had been evolving quickly for years. The mainstream just wasn't paying attention.

By now, everyone talks about ChatGPT. I've never seen anything like it: even in restaurants, in cafés and during my work breaks, I overhear people talking about it. What I think made it so popular is how accessible it is to the masses. All you need is an account, and then you start writing to this mysterious entity as you would with a normal chatbot. Almost everyone knows how to write and how to use a chat app. This makes the barrier to entry very low, and people can try almost anything with it.

This takes us back to KoboldAI. People don't know about it because it serves a niche purpose: running GPT models locally on your own hardware to write stories and play games. The other reason is purely technical: you're limited by what your hardware can run. But let's not get ahead of ourselves; we'll get a little more technical later.

How to run KoboldAI on your PC? 💻

Head to this section, which has clear instructions on how to install it on your PC.

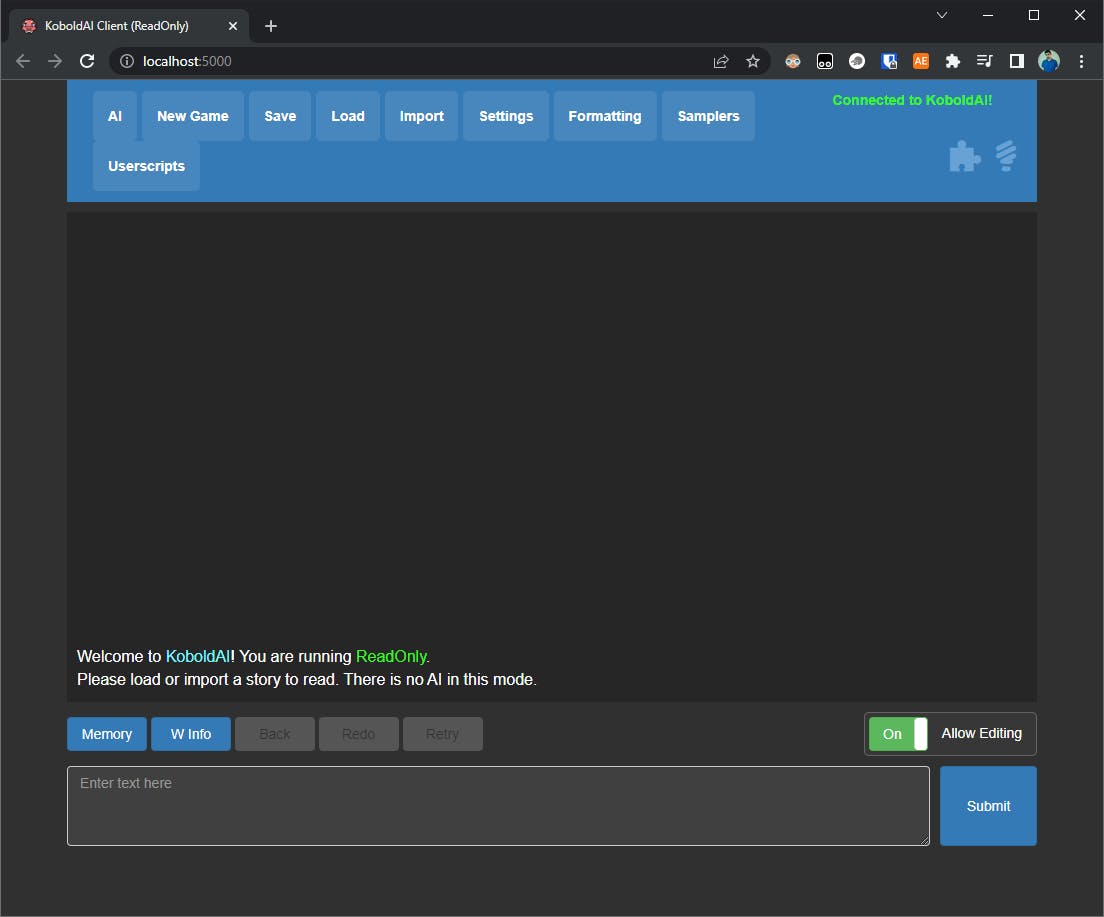

Once it's installed, start KoboldAI. A console app will open first. Then your browser will open a new tab at `http://localhost:5000/` with a nice web UI, as follows:

Now that the interface is ready, let's get to the meaty part. As you may have noticed, the prompt currently says the following:

Welcome to KoboldAI! You are running ReadOnly.

Please load or import a story to read. There is no AI in this mode.

As it says, no AI is running right now, so we need to load a model to have something to interact with. To do so, click the AI button. You'll see a popup saying "Select A Model To Load" and a list of entries. Select "Untuned GPT-Neo/J", then "GPT-Neo 125M", and finally click the Load button. Depending on your internet speed and processing power, downloading and preparing the model may take a while. Once it's done, the prompt will say the following:

Welcome to KoboldAI! You are running EleutherAI/gpt-neo-125M.

Please load a game or enter a prompt below to begin!

Here is a GIF that illustrates the whole process:

You can now try writing a partial sentence and pressing Enter (or clicking the Submit button) to see how the AI finishes it. For example, I typed "Chocolate ice cream is" and the AI filled in the rest with:

Chocolate ice cream is a popular holiday dessert. It's a simple ice cream with a sweet, savory flavor. Chocolate ice cream is a perfect treat for the holidays. The ice cream is made from chocolate, and is a great way to dress up your holiday meal.

In order to make chocolate ice cream, you need to make the chocolate stick.

Note: Your output will most likely differ from mine.

Now let's jump right into the next section and understand what happened.

How does KoboldAI work? ⚙

After you submit your prompt, KoboldAI uses the loaded model (in this case GPT-Neo 125M) to finish your sentence. This step is called inference. The model generates new text from your initial prompt one word at a time. At each step, it predicts which word (more precisely, which token) is likely to come next, given all the text before it. Because each prediction takes everything written so far into account, the output tends to follow the topic and style of your prompt.
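To make the idea of inference more concrete, here is a minimal Python sketch of that loop. It replaces the neural network with a made-up table of next-word probabilities (`NEXT_WORD_PROBS`, hypothetical). At each step it simply takes the most likely word, which is called greedy decoding. Real models like GPT-Neo work on tokens and usually sample rather than always taking the top choice. Treat this as an illustration of the principle, not as KoboldAI's code.

```python
# A toy "language model": for each word, made-up probabilities of the next word.
# A real model like GPT-Neo computes these with a neural network over tokens.
NEXT_WORD_PROBS = {
    "trees": {"are": 0.9, "grow": 0.1},
    "are": {"the": 0.7, "important": 0.3},
    "the": {"most": 0.6, "human": 0.4},
    "most": {"important": 1.0},
    "important": {"part": 0.8, "because": 0.2},
    "part": {"of": 1.0},
    "of": {"the": 1.0},
}


def generate(prompt: str, max_new_words: int) -> str:
    """Greedy autoregressive generation: repeatedly append the most likely next word."""
    words = prompt.lower().split()
    for _ in range(max_new_words):  # stop once the word budget is used up
        candidates = NEXT_WORD_PROBS.get(words[-1])
        if not candidates:  # the toy model has nothing to say after this word
            break
        # Greedy decoding: always take the highest-probability candidate.
        next_word = max(candidates, key=candidates.get)
        words.append(next_word)
    return " ".join(words)


print(generate("Trees are", 12))
```

Running it prints `trees are the most important part of the most important part of the most`. The toy model gets stuck in a loop and only stops because it hits the word limit. This is a small-scale version of the repetitive output small models can produce.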

Let's try to check out how KoboldAI did this:

1. In the top bar, click the Settings button and set the Probability toggle to On.
2. Click Settings again to retract the bar.
3. Click New Game, then Blank story, and confirm to start over.

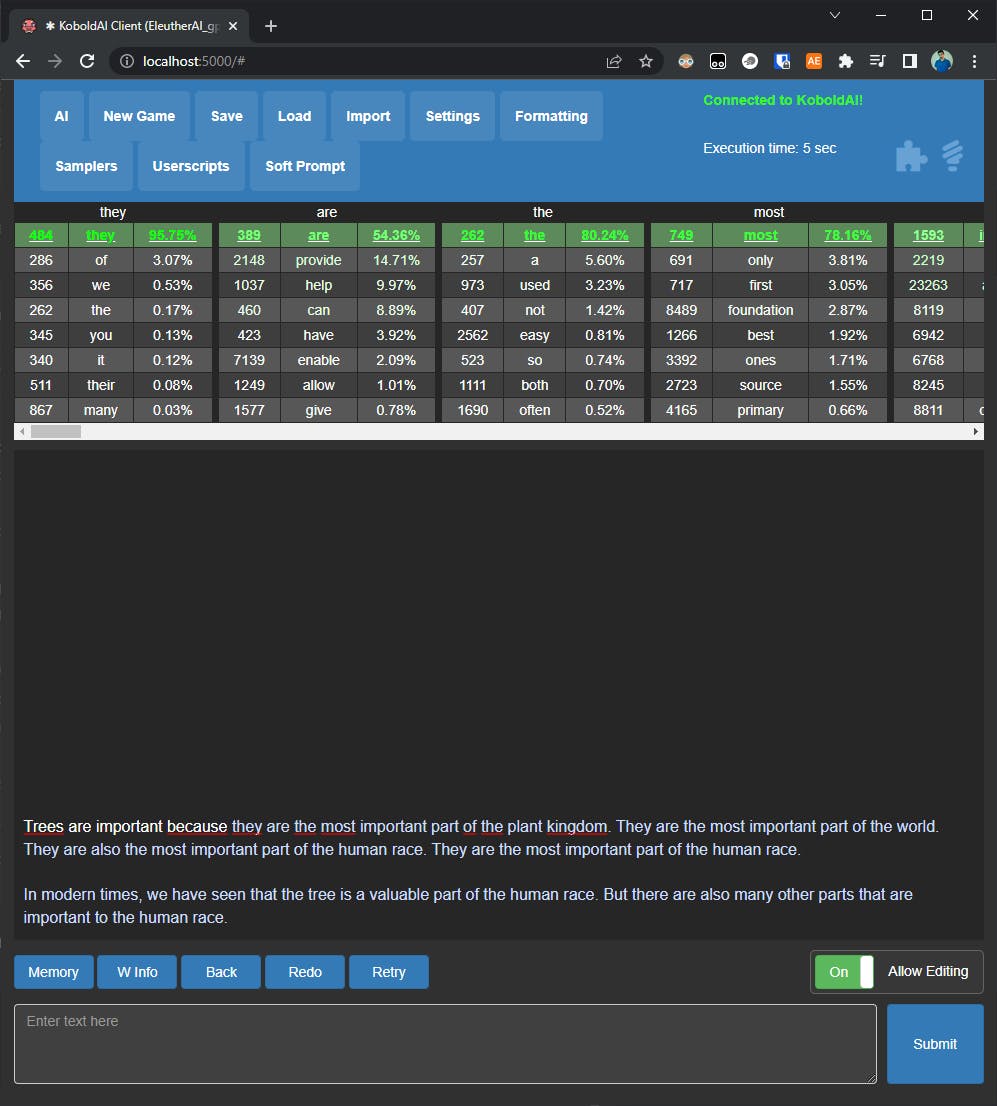

Now try another prompt, for example "Trees are important because". This resulted in the following output for me:

Trees are important because they are the most important part of the plant kingdom. They are the most important part of the world. They are also the most important part of the human race. They are the most important part of the human race.

In modern times, we have seen that the tree is a valuable part of the human race. But there are also many other parts that are important to the human race.

The web UI now also shows tables of words and percentages, like in the following screenshot:

As you can see from my output (and maybe yours), the generated text sometimes doesn't make sense or becomes very repetitive. This is completely normal. The AI follows each word with terms it deems probable, and those are the candidates and percentages shown in the tables. It keeps going until it reaches the maximum number of words (more precisely, tokens) it's supposed to generate.
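The percentages in those tables are derived from the model's raw scores (logits) for each candidate word. Below is a minimal sketch of the usual conversion, assuming made-up scores. A softmax turns the scores into probabilities that sum to 1, and then the top few candidates are listed. This is similar in spirit to KoboldAI's Probability view, but the scores and the `top_k_table` helper are hypothetical.

```python
import math


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Turn raw model scores (logits) into probabilities that sum to 1."""
    top = max(scores.values())
    # Subtracting the max score keeps math.exp from overflowing.
    exps = {word: math.exp(s - top) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def top_k_table(scores: dict[str, float], k: int = 3) -> list[tuple[str, float]]:
    """Return the k most likely next words with their probability in percent."""
    probs = softmax(scores)
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    return [(word, round(100 * p, 1)) for word, p in ranked[:k]]


# Hypothetical scores a model might assign after "Trees are important because they"
scores = {"are": 2.0, "provide": 1.5, "give": 1.0, "make": 0.2}
for word, percent in top_k_table(scores):
    print(f"{word:>8} {percent:5.1f}%")
```

A slightly higher score for one word turns into a noticeably higher probability. This helps explain why the same "safe" continuations keep coming back.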

Head back to Settings and turn off the Probability toggle. Locate the Gens Per Action slider and raise it to its maximum value: 5. Start a new game and enter, for example, "The human race is".

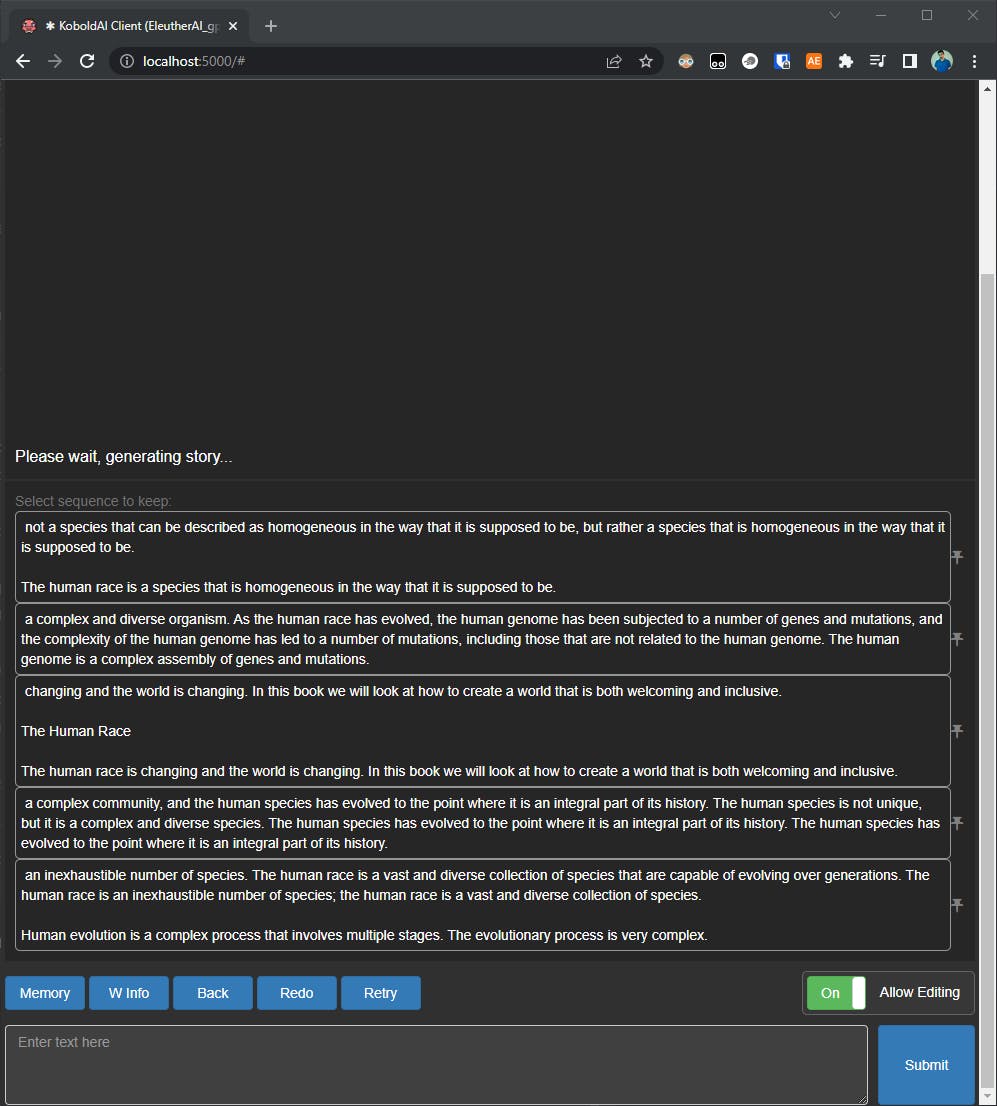

You may have noticed that the app took longer to generate text and that it produced 5 sequences. Below is a screenshot of what I ended up with:

Here, we asked the AI to generate 5 alternative results that we can pick from to continue our story.
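Conceptually, Gens Per Action just runs generation several times. Each next word is picked at random according to its probability (sampling) instead of always taking the top one, so the runs diverge. This is also why your output won't match mine. Here is a minimal sketch with a hypothetical probability table; `generate_options` is an illustrative name, not a KoboldAI function.

```python
import random

# Hypothetical next-word probabilities, standing in for a real model.
NEXT_WORD_PROBS = {
    "is": {"a": 0.5, "the": 0.3, "not": 0.2},
    "a": {"species": 0.6, "mystery": 0.4},
    "the": {"future": 0.7, "problem": 0.3},
    "not": {"alone": 1.0},
}


def sample_continuation(prompt: str, max_new_words: int, rng: random.Random) -> str:
    """Sample one continuation: each next word is drawn at random, weighted by its probability."""
    words = prompt.split()
    for _ in range(max_new_words):
        candidates = NEXT_WORD_PROBS.get(words[-1])
        if not candidates:  # nothing known after this word: stop early
            break
        next_word = rng.choices(list(candidates), weights=list(candidates.values()), k=1)[0]
        words.append(next_word)
    return " ".join(words)


def generate_options(prompt: str, n: int, max_new_words: int = 5, seed: int = 0) -> list[str]:
    """Like setting Gens Per Action to n: produce n independent continuations to choose from."""
    rng = random.Random(seed)
    return [sample_continuation(prompt, max_new_words, rng) for _ in range(n)]


for option in generate_options("The human race is", 5):
    print(option)
```

Each option costs a full generation, which is why asking for 5 results takes noticeably longer. The fixed `seed` only makes this sketch reproducible.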

Chat vs Adventure vs Story modes 🤹‍♂️

Now that we have an idea of how KoboldAI works, let's understand the modes that it provides for us and how we can play around with them.

Chat mode 💭

Under Settings, turn on the Chat mode toggle (don't forget to reset Gens Per Action to 1). A text field prefilled with "You" now appears above the prompt. This will be your name tag during the chat. Now you can use KoboldAI like ChatGPT. The only prerequisite is to write a context prompt to get started, for example:

Jack is a software engineer. Jack likes to work on new technology and is always curious to discover new things. The following conversation is between Jack and You.

You: Hello, Jack!

Jack: Hi, how are you?

You: I'm good, how about yourself?

This gives the AI some context to start with. Of course, you can change your name (and update it in the small text field accordingly). The more context you add, the more consistent the bot will be.
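Under the hood, chatting with a plain text-generation model is still just text completion. A common approach is to flatten the context and the chat history into one prompt that ends with the bot's name tag. The generated text is then cut where the model starts writing your next line. The sketch below assumes KoboldAI does something similar, though its exact formatting may differ. Both helper functions and the sample model output are hypothetical.

```python
def build_chat_prompt(context: str, turns: list[tuple[str, str]], bot_name: str) -> str:
    """Flatten a context paragraph and a chat history into one text prompt.

    The prompt ends with "<bot_name>:" so the most natural continuation
    for the model is the bot's next line.
    """
    lines = [context.strip(), ""]
    lines += [f"{speaker}: {text}" for speaker, text in turns]
    lines.append(f"{bot_name}:")
    return "\n".join(lines)


def first_reply(generated: str, user_name: str) -> str:
    """Keep only the bot's reply: cut where the model starts writing the user's next line."""
    return generated.split(f"\n{user_name}:")[0].strip()


context = (
    "Jack is a software engineer. Jack likes to work on new technology and is always "
    "curious to discover new things. The following conversation is between Jack and You."
)
history = [
    ("You", "Hello, Jack!"),
    ("Jack", "Hi, how are you?"),
    ("You", "I'm good, how about yourself?"),
]
prompt = build_chat_prompt(context, history, bot_name="Jack")
print(prompt)

# Pretend the model continued the prompt like this (made-up output):
raw_output = " Pretty good, I'm trying out a new framework.\nYou: Which one?"
print(first_reply(raw_output, user_name="You"))
```

Everything the bot "knows" is whatever fits in this text. That is why a richer context paragraph makes its answers more consistent.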

Here's a small capture of my discussion with the bot:

You may have noticed that the bot isn't making much sense. If you tried it locally, you may even have received some inconsistent answers. This is completely normal. We'll quickly go through the two other modes, then try to understand why the AI is so limited.

Adventure mode ⚔

Adventure mode is the main reason KoboldAI exists in the first place. It lets you play a text adventure in which you can do practically whatever you like, provided you follow some guidelines to keep the gameplay consistent.

To enable Adventure mode, go to Settings, turn off Chat mode and turn on Adventure mode. Then click the AI button to open the model popup. Choose the folder "Adventure Models", then "Adventure 125M Mia", and click Load. You'll have to wait for the model to download. Meanwhile, keep an eye on the console app to spot potential errors, just in case. Once it's loaded, click New Game and then Blank story to start with a blank slate. Finally, just like in chat mode, you have to provide some starting context. Here's a small paragraph to get you started:

Conan is lost with his trusty friend Aaron in the wild woods of the northern lands. It is starting to get dark, and they are looking for a place to set up a campfire for the night.

The AI will pick up from there and generate more text accordingly. You can then play it like a text-based dungeon game, with the AI as your one-of-a-kind game master. Here is a screenshot of the context paragraph quoted above, followed by my prompt highlighted in blue:

I'm not good at text-based dungeon games myself, so I can't vouch for it, but you get the idea. Let's quickly talk about story mode.

Story mode 🧾

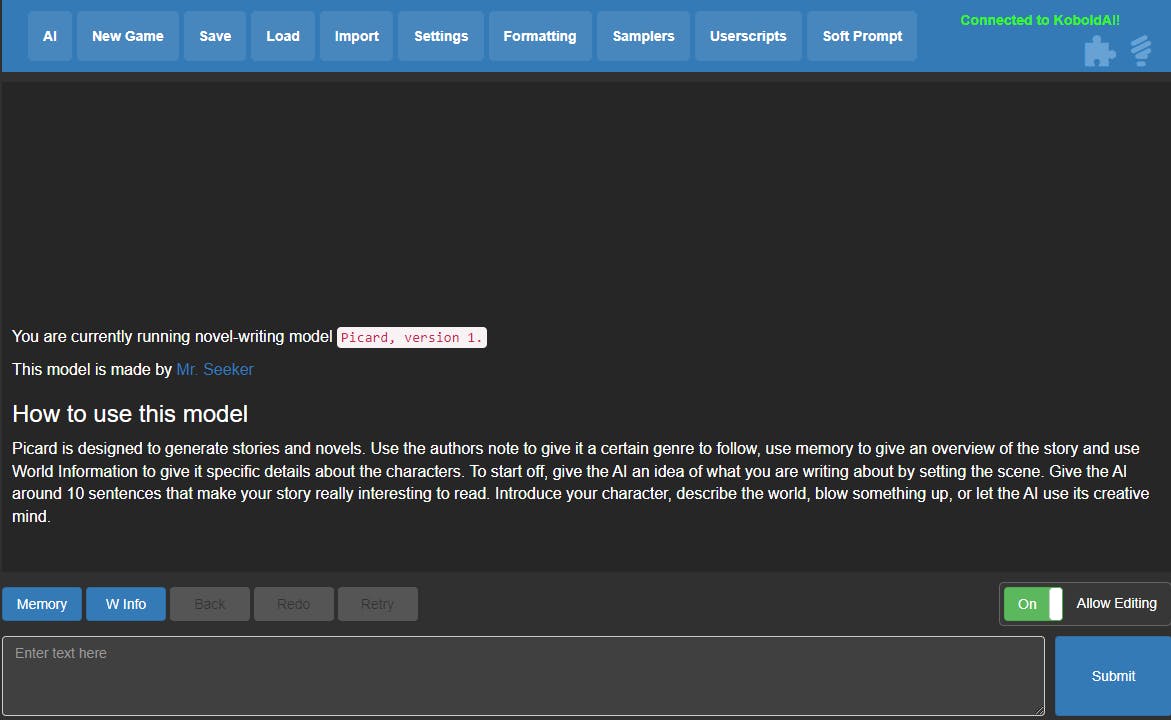

Finally, story mode. You might have tried toying around with ChatGPT to generate a story; KoboldAI has you covered here too. First, go to Settings and turn off both Adventure mode and Chat mode. This switches you back to the regular mode. Next, choose a suitable model: click the AI button and select "Novel models", then "Picard 2.7B (Older Janeway)". This model is much bigger than the ones we've tried so far, so be warned that KoboldAI might start devouring your RAM. Give it some time to load. Once it's done, click New Game and select "Blank story".

Kobold will show you the following:

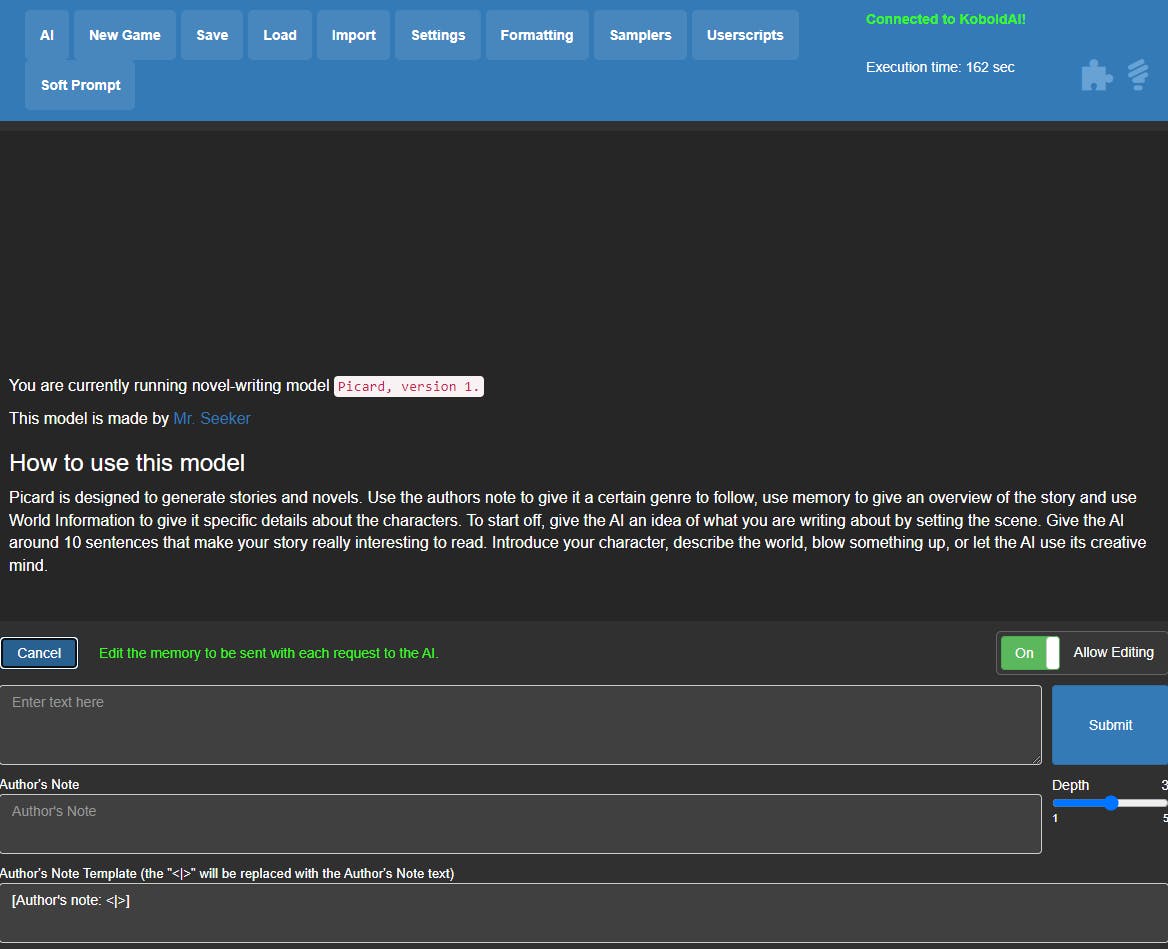

Click the Memory button and you'll see two new fields appear under your input textbox:

The first text box is the memory. Fill it with as much detail and context as you can; this gives the AI something to build on. In the Author's note, you can, for example, ask it to imitate the style of Stephen King or Fyodor Dostoyevsky 👀.
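Why does the memory matter so much? A model can only look at a limited amount of recent text at once, so a long story eventually gets cut off at the beginning. The sketch below shows one plausible way to assemble a prompt under that limit. It always keeps the memory at the top and places the author's note close to the end, near where the model continues writing. Several details are assumptions for illustration, not KoboldAI's actual implementation:

- words stand in for tokens;
- the `build_story_prompt` name is made up;
- the `[Author's note: ...]` formatting is invented;
- the `note_depth` default is arbitrary.

```python
def build_story_prompt(memory: str, authors_note: str, story: list[str],
                       max_words: int, note_depth: int = 3) -> str:
    """Assemble a prompt from memory, recent story paragraphs and an author's note.

    Hypothetical scheme: memory always comes first so it is never dropped,
    only the most recent paragraphs that fit in the word budget are kept,
    and the author's note goes note_depth paragraphs before the end.
    Word counts approximate the model's token limit.
    """
    note_line = f"[Author's note: {authors_note}]" if authors_note else ""
    budget = max_words - len(memory.split()) - len(note_line.split())
    recent: list[str] = []
    for paragraph in reversed(story):  # walk backwards from the newest paragraph
        cost = len(paragraph.split())
        if cost > budget:  # older paragraphs no longer fit: drop them
            break
        recent.insert(0, paragraph)
        budget -= cost
    if note_line:
        recent.insert(max(0, len(recent) - note_depth), note_line)
    parts = [memory] if memory else []
    return "\n".join(parts + recent)


memory = "Elena keeps a lighthouse on a remote island."
note = "Write in a gothic style."
story = [
    "The storm began at dusk.",
    "Elena lit the lamp.",
    "A ship appeared, then vanished.",
    "Someone knocked.",
]
print(build_story_prompt(memory, note, story, max_words=25))
```

With a budget of 25 words, the two oldest paragraphs fall out of the prompt, but the memory survives. That is the point of putting your key details there.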

Let's check out this example to get ourselves started. After filling in the memory field and the author's note, click the Submit button. The UI will then show the prompt text box and the usual Submit button. Copy the prompt and hit Submit.

Side note: depending on your configuration, generation might take a while and use a lot of GPU/CPU. This is completely normal.

The AI will complete the paragraph, and you can carry on by giving it another sentence to kick off the next round of generation. This way, you can guide it to shape the story the way you like.

Now that we understand all those modes, let's try to understand what is happening under the hood and, most importantly, why it's so different from ChatGPT.

Models, hardware and AI magic ✨

This section covers two main topics: models and hardware. As it's a very complicated subject, I'll try my best to convey the information as simply as I can. If you feel that it's not enough, be sure to visit the links and references in each paragraph.

Understanding models 📦

After starting KoboldAI, we loaded a model: "GPT-Neo 125M" from the "Untuned GPT-Neo/J" list, to be precise. This is what is called a "pre-trained model". This one is provided by EleutherAI, a collective of volunteer AI researchers who aim to provide large language models in an open-source manner.

GPT-Neo 125M is a lightweight pre-trained transformer model. Your machine ran it on its CPU/GPU to complete the sentence you started earlier with "Chocolate ice cream is".

KoboldAI is the front-end that lets you quickly load this model and interact with it through a web UI.

In the case of ChatGPT, it's almost the same thing. Of course, the interface is different, and the model is way bigger. ChatGPT is based on OpenAI's GPT-3.5 series, the same family as text-davinci-003.

To give you an idea of the scale of text-davinci-003 vs GPT-Neo 125M:

text-davinci-003 reportedly has 175B (billion) parameters.

GPT-Neo 125M has 125M (million) parameters, as its name says.
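A quick back-of-the-envelope calculation shows what these numbers mean for memory. This sketch assumes each parameter is stored as a 32-bit (4-byte) or 16-bit (2-byte) float. It counts only the weights themselves; actually running the model needs extra memory on top of that. The 175B figure is the reported size quoted above, and Picard 2.7B is the story model we loaded earlier.

```python
# Parameter counts of models mentioned in this article.
MODELS = {
    "GPT-Neo 125M": 125e6,
    "Picard 2.7B": 2.7e9,
    "175B model": 175e9,
}

# Assumed storage cost per parameter for common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def weights_gb(n_params: float, precision: str = "fp16") -> float:
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


for name, n in MODELS.items():
    print(f"{name:>13}: {weights_gb(n, 'fp32'):7.2f} GB in fp32, {weights_gb(n, 'fp16'):7.2f} GB in fp16")

print(f"Size ratio: {MODELS['175B model'] / MODELS['GPT-Neo 125M']:.0f}x")
```

Under these assumptions, the weights alone take:

- GPT-Neo 125M: about 0.5 GB in fp32.
- Picard 2.7B: about 5.4 GB in fp16.
- A 175B model: about 350 GB in fp16, with roughly 1,400 times more parameters than GPT-Neo 125M.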

As you can see, the scale is not even close. This is crucial to understand: it's not as simple as running some software, throwing a model at it and calling it a day.

Small models are very fast to run on modest hardware, but as you've experienced, the AI can sometimes spit out weird output (note that even ChatGPT can go haywire, like in this example). What's the solution? Run bigger models. Bigger models require more powerful hardware. Let's jump into the next section to talk about it.

Hardware makes all the difference 💾

Let's look at what ChatGPT runs on, just to get an idea of the hardware scale. Sources are sparse, but as far as I could understand from this article, we're talking about anywhere from 1 to 8 Nvidia A100 GPUs. In the Azure cloud, these cost around $3/hour per unit. These GPUs are made specifically for AI and have 80GB of VRAM each. I hope this helps you appreciate the sheer scale of text-davinci-003. It also explains why, even if OpenAI made the model available right now, you couldn't run it locally on your PC.

You can, of course, run complex models locally on your GPU if it's high-end enough, but the bigger the model, the higher the hardware requirements. This is why a lot of people prefer cloud computing for this. But even with cloud computing, the numbers (and the bill) can quickly spiral out of control.
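To see how quickly the numbers climb, here is a rough sketch based on the figures above: 80 GB of VRAM per A100 and about $3 per GPU per hour. It assumes 175B parameters stored in fp16 (2 bytes each) and ignores all overhead, so real requirements would be higher. The prices are the article's rough estimates, not a quote.

```python
import math

A100_VRAM_GB = 80          # VRAM per GPU, as quoted in the article
PRICE_PER_GPU_HOUR = 3.0   # USD, the rough Azure figure quoted in the article


def gpus_needed(weights_gb: float, vram_gb: float = A100_VRAM_GB) -> int:
    """Minimum number of GPUs whose combined VRAM can hold the weights (ignores overhead)."""
    return math.ceil(weights_gb / vram_gb)


def monthly_cost(n_gpus: int, hours: float = 24 * 30) -> float:
    """Cost of keeping n_gpus running around the clock for a 30-day month."""
    return n_gpus * PRICE_PER_GPU_HOUR * hours


weights = 175e9 * 2 / 1e9   # 175B parameters in fp16, converted to GB
n = gpus_needed(weights)
print(f"{weights:.0f} GB of weights -> at least {n} GPUs")
print(f"{n} GPUs: ${n * PRICE_PER_GPU_HOUR:.0f}/hour, about ${monthly_cost(n):,.0f}/month")
print(f"8 GPUs: about ${monthly_cost(8):,.0f}/month")
```

Under these assumptions, the weights alone need at least 5 GPUs, or about $15/hour. Keeping 8 GPUs running for a 30-day month would cost around $17,280.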

Should I run KoboldAI locally? 🤔

You might wonder whether it's worth playing around with something like KoboldAI locally when ChatGPT is available. To be honest, this is a legitimate question, but I think it's an unfair comparison. KoboldAI is open-source software that uses publicly available, open-source models. They're smaller, yes, but available to everyone. This lets us experiment and, most importantly, get involved in a new field.

Playing around with ChatGPT was a novelty that quickly faded for me. But I keep returning to KoboldAI and playing around with models to see what useful things they can do. For me, it represents an easy entry into the world of AI, deep learning and a lot of Python fiddling. I've wasted so many nights tinkering with code and tools that I barely understood, and I love it!

Honestly, I'd advise you to install it, play around and mess with it. I strongly believe that generative AI is the future and that you should pay attention to it.

Wrap-up 📦

In this article, we discovered KoboldAI, quickly understood what it does, installed it on our computer and played around with some settings. We've also understood how each mode works and broadly how it uses models to generate text output.

I hope you've learned something new from me today. If you feel that I missed a detail or wrongfully explained something, please correct me in the comments.

Cheers!

Photo by @Erdenebayar from Pixabay.